Description

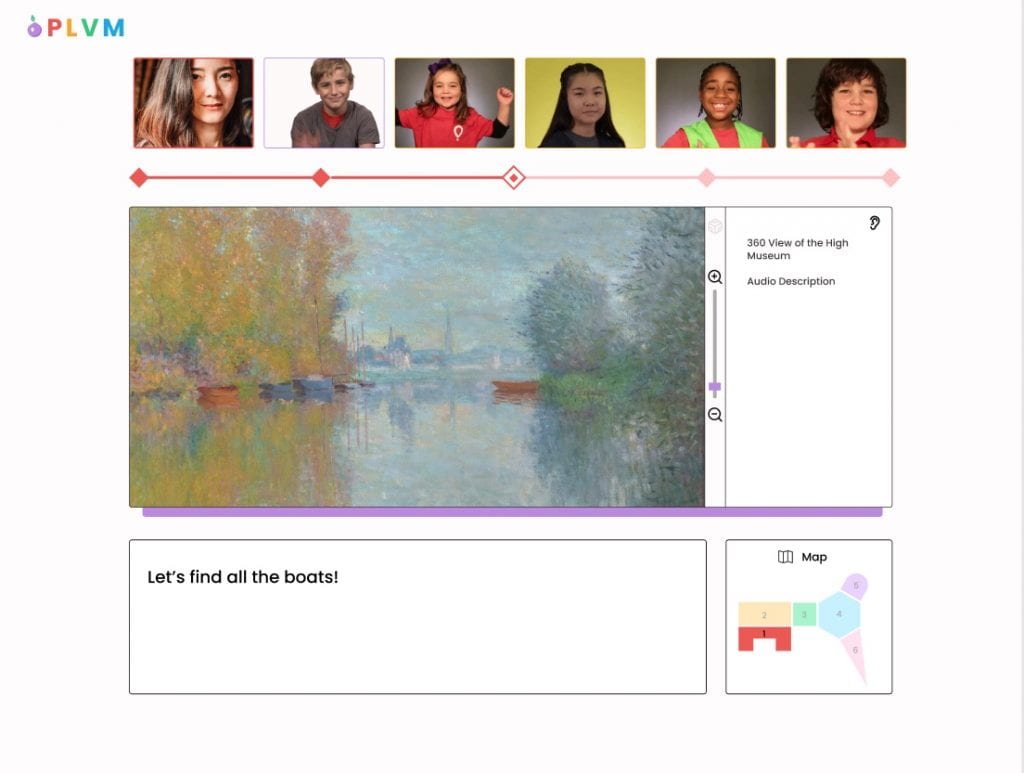

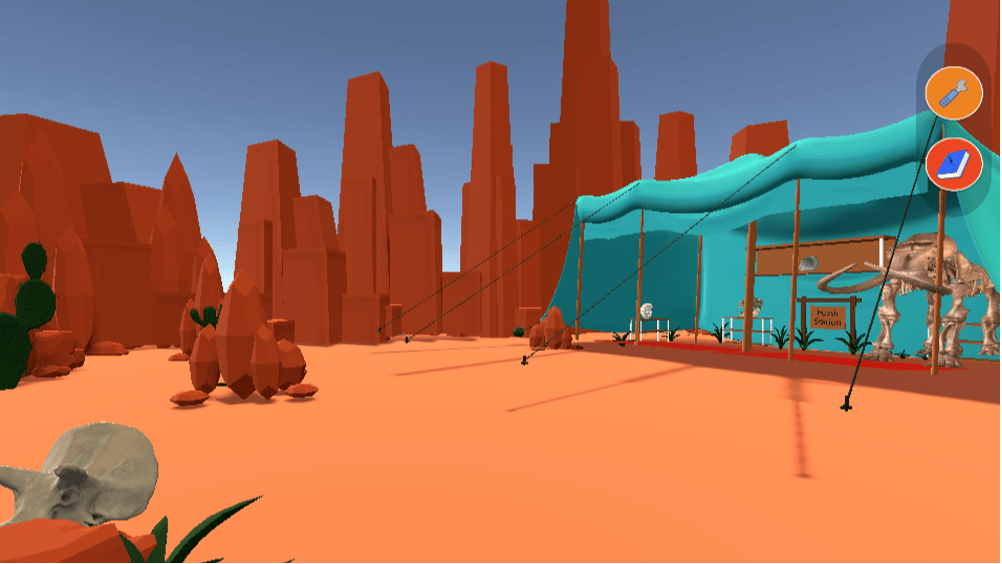

Although artificial intelligence (AI) plays an increasingly large role in mediating human activities, most education about what AI is and how it works is confined to computer science courses. This research is a collaboration between the TILES lab, the Expressive Machinery Lab (Dr. Brian Magerko, Georgia Tech), and the Creative Interfaces Research + Design Studio (Dr. Duri Long, Northwestern University) to create a set of museum exhibits that teach fundamental AI concepts to the public. In particular, we aim to reach middle school girls and students from groups that are underrepresented in computer science.

This four-year project is funded by the NSF Advancing Informal STEM Learning (AISL) program (NSF DRL #2214463). We are collaborating with the Museum of Science and Industry in Chicago to conduct focus groups, needs assessments, and pilot testing of exhibit designs based on our prior work.

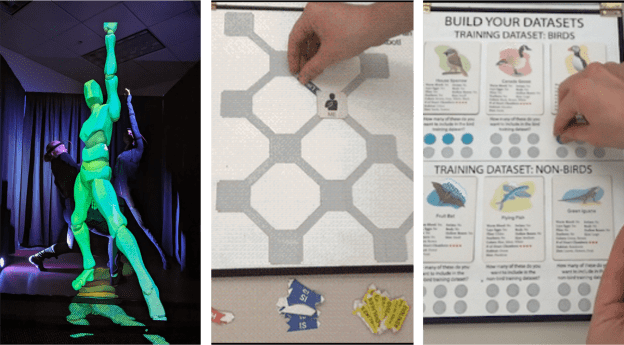

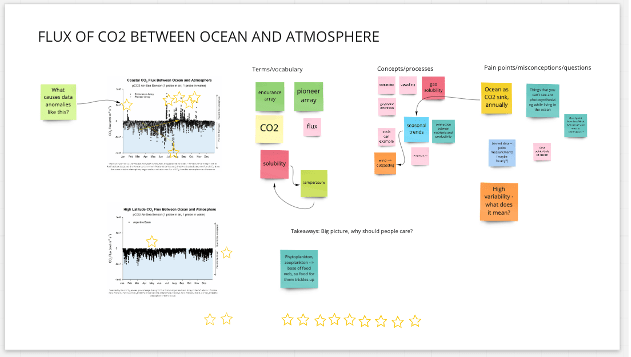

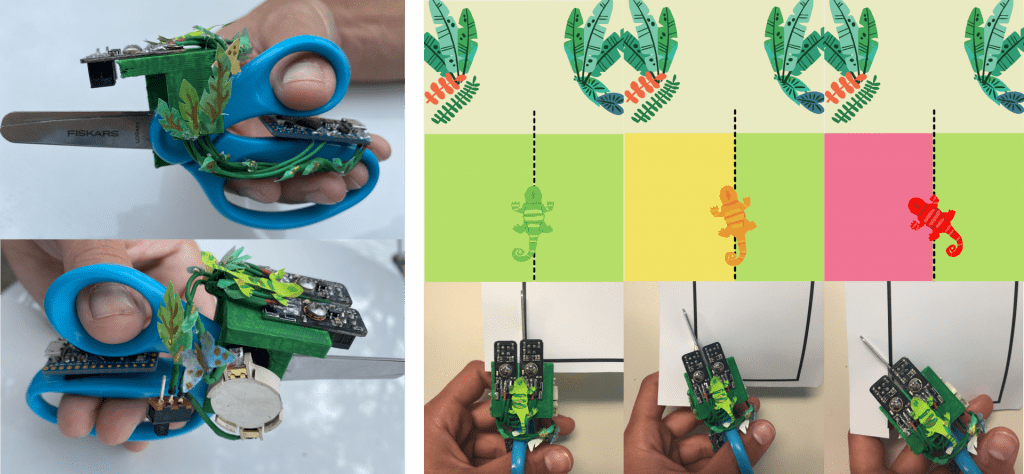

This research will explore how embodiment and co-creativity can help learners make sense of and engage with AI concepts.

Publications

- Kafai, Y. B., Proctor, C., Cai, S., Castro, F., Delaney, V., DesPortes, K., Hoadley, C., Lee, V. R., Long, D., Magerko, B., Roberts, J., Shapiro, B. R., Tseng, T., Zhong, V., & Rosé, C. P. (2024). What Does it Mean to be Literate in the Time of AI? Different Perspectives on Learning and Teaching AI Literacies in K-12 Education. In Lindgren, R., Asino, T. I., Kyza, E. A., Looi, C. K., Keifert, D. T., & Suárez, E. (Eds.), Proceedings of the 18th International Conference of the Learning Sciences – ICLS 2024 (pp. 1856-1862). International Society of the Learning Sciences. https://repository.isls.org//handle/1/10828

- Belghith, Y., Mahdavi Goloujeh, A., Magerko, B., Long, D., McKlin, T., & Roberts, J. (2024). Testing, Socializing, Exploring: Characterizing Middle Schoolers’ Approaches to and Conceptions of ChatGPT. In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI ’24) (Article 276, pp. 1–17). Association for Computing Machinery. https://doi.org/10.1145/3613904.3642332

- Long, D., Rollins, S., Ali-Diaz, J., Hancock, K., Nuonsinoeun, S., Roberts, J., & Magerko, B. (2023). Fostering AI Literacy with Embodiment & Creativity: From Activity Boxes to Museum Exhibits. In Proceedings of the 22nd Annual ACM Interaction Design and Children Conference (IDC ’23) (pp. 727–731). Association for Computing Machinery. https://doi.org/10.1145/3585088.3594495