Description

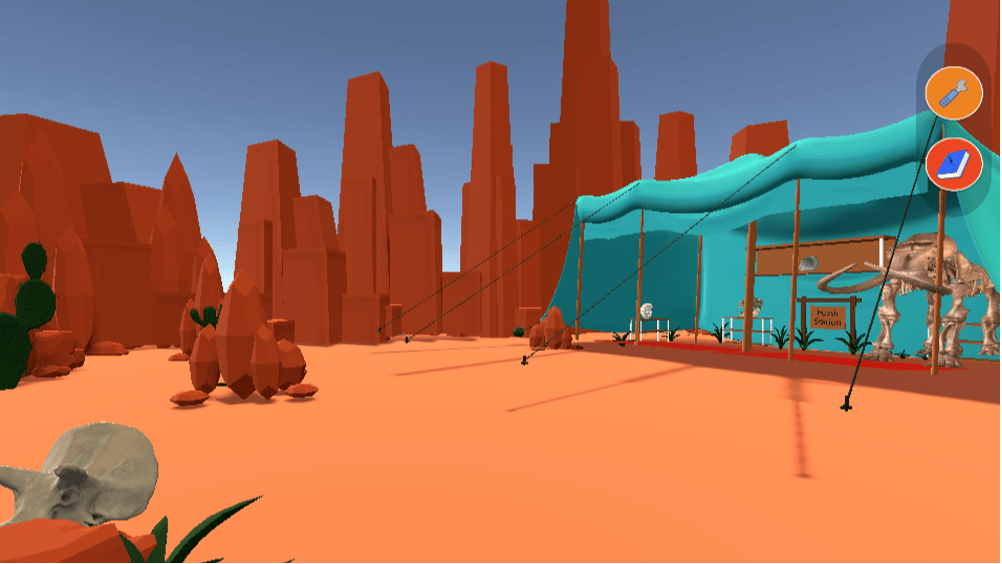

Current Crisis is a vertically integrated project developed at the Georgia Institute of Technology in collaboration with the Vertically Integrated Projects (VIP) group. The project focuses on a simulation-style video game, designed and developed by undergraduate students, that puts the player in the role of a power system operator tasked with managing the grid during extreme events such as wildfires and hurricanes. The project uses gamified learning to teach players about critical power grid infrastructure and sustainability.

Previously, the VIP team partnered with Dr. Jessica Roberts and the Tiles Lab to display a game demo at the Dataseum in the Crosland exhibition space. The Tiles Lab is now collaborating with the team again to measure the game's learning outcomes and its overall effectiveness as a learning tool.

Visit the VIP group website and play the game for more information.

Sponsor(s): The video game will complement work for the NSF-funded AI Institute for Advances in Optimization (AI4Opt, www.ai4opt.org) and research efforts at Sandia National Laboratories.

Research Team

VIP Group and Tiles Lab (Jessica Roberts and Stella Quinto Lima)